About Me

My research interests during my Ph.D. included robust control, human-robot interaction, optimization, model predictive control, and reinforcement learning in robotics. Over the past years, I have worked on multiple research projects on designing integrated strategies for modularized robotics and mobility systems, including self-driving vehicles in uncertain environments. My current work focuses on motion planning, behavior planning, and fleet management systems for autonomous mobility.

Experience

Senior Motion Planning Engineer

Dec. 2023 - Present · Motion Team · Autonomous Robot 24Hours 7Days (AR247)

- Designed motion planning strategies for heavy AMRs and validated them through high-fidelity simulations before deployment.

- Trained and evaluated RL-based behavior policies within the simulation loop to enhance decision-making robustness.

- Bridged simulation and real-world operation by integrating planning/control pipelines, improving safety and operational efficiency.

- Developed fleet monitoring, mission planning, and operator dashboards for large-scale system operation.

- Built scenario-driven frameworks to evaluate multi-robot path planning and conflict resolution.

Postdoctoral Researcher

Aug. 2021 - Dec. 2023 · Robotics & Mobility Lab. (RML) · Ulsan National Institute of Science and Technology (UNIST)

- Researched and developed interval-prediction, RL, deep RL, and tree-based planning methods for uncertain and complex driving scenarios.

- Built simulation environments to benchmark rule-based and RL decision-making.

- Integrated planning/control algorithms into MORAI via ROS bridges with hardware-in-the-loop (HIL) testing for smooth real-robot deployment.

- Explored the CARLA simulator for testing local and behavior planning algorithms.

Graduate & Postdoctoral Researcher

Sept. 2017 – Feb. 2021 · Fluid Power & Machine Intelligence Lab. (FPMI) · University of Ulsan (UOU)

- Applied model-based RL and predictive control for safe collaborative human-robot interaction.

- Developed adaptive optimal estimators and addressed network delay/dropout issues in robotic systems.

- Implemented and validated control and motion planning algorithms integrating simulation with HIL experiments.

- Developed control projects for renewable and sustainable energy systems (Fluid‑based Triboelectric Nanogenerator; Floating Offshore Wind Turbines) to maximize captured power.

Lecturer

Sept. 2015 – Jul. 2017 · Electrical and Electronic Engineering Dept. (EEE) · Thu Duc College of Technology (TDC)

- Taught courses in microprocessor programming, C/C++, robotics, and control theory.

Technical Engineer & Graduate Researcher

Sept. 2012 – Jun. 2016 · Ho Chi Minh City University of Technology and Education (UTE)

- Designed and deployed embedded control systems for automated parking and industrial production lines.

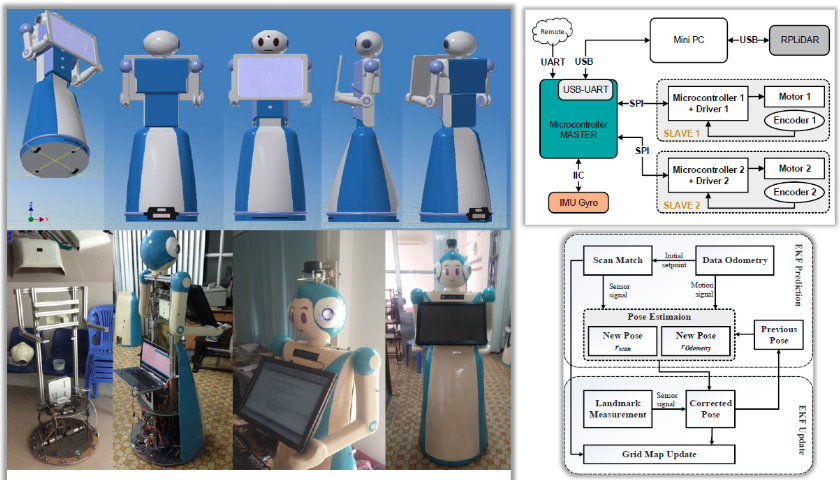

- Researched and developed Motion Planning and Control Algorithms for an Autonomous Reception Robot.

Education

Ph.D. in Mechanical and Automotive Engineering

Sept. 2017 - Feb. 2021 · University of Ulsan (UOU)

M.S. in Mechatronics Engineering

Sept. 2014 - Jun. 2016 · Ho Chi Minh City University of Technology and Education (UTE)

Thesis: Motion Planning and Control Algorithms for an Autonomous Reception Robot.

B.S. in Electrical and Electronic Engineering Technology

Sept. 2009 - Jul. 2013 · Ho Chi Minh City University of Technology and Education (UTE)

Publications

Projects

Fleet Autonomous Mobile Robot

These robots, equipped with steering-based locomotion mechanisms, have been deployed for security patrols in public areas and on construction sites, as well as for logistics operations in industrial environments. They autonomously navigate complex, shared internal roadways alongside people and other vehicles such as trucks and forklifts. The project is also built around a conflict-aware dispatcher for deployment in large factory environments where AMRs operate on shared indoor and outdoor internal roads. The system enables coordinated operation among robots and seamless interaction with web-based tools and human operators. It serves as the central control layer for scalable, safe, and intelligent fleet management, laying the foundation for smart factory automation. I was responsible for several core features, including:

- A comprehensive simulation environment, built and maintained for testing and validating algorithms.

- Behavioral Planning for real-time decision-making, including obstacle avoidance and speed profile planning.

- Global Navigation, tailored to scenario requirements and factory map data.

- Local Trajectory Generation Algorithms to ensure smooth and feasible motion control.

- Collision Risk Assessment and Safety Validation to guarantee reliable and safe navigation.

- Task Scheduling and Dispatching: Dynamically assigns schedules and inspection tasks to each robot based on real-time conditions and system priorities.

- Multi-Robot Path Planning and Conflict Resolution: Manages priority-based rerouting and waiting strategies to prevent congestion and resolve conflicts in real time, especially in narrow roads and intersections shared by multiple robots.

- Monitoring and Visualization: Collaborated with the front-end team to integrate a web-based dashboard that supports real-time, bidirectional messaging and a logging database. It provides live updates on robot status and task progress, enabling operators to supervise the entire fleet remotely.
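The priority-based waiting strategy listed above can be illustrated with a minimal sketch. It is not the deployed dispatcher: the `Request` type, the segment names, and the "larger number means more urgent" convention are all assumptions made for illustration. Each shared segment is granted to at most one robot, and every other robot requesting it is told to wait.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    robot_id: str   # hypothetical robot identifier
    segment: str    # hypothetical name of a shared road segment
    priority: int   # larger value = more urgent task (assumed convention)


def resolve_conflicts(requests, occupied=None):
    """Grant each segment to at most one robot; all other requesters wait.

    `occupied` maps segment -> robot_id for robots already inside a segment.
    Returns (granted, waiting): granted maps segment -> robot_id, and
    waiting is a sorted list of robot ids that should hold position.
    """
    granted = dict(occupied or {})
    waiting = []
    # Highest priority first; ties are broken by robot id for determinism.
    for req in sorted(requests, key=lambda r: (-r.priority, r.robot_id)):
        holder = granted.get(req.segment)
        if holder is None or holder == req.robot_id:
            granted[req.segment] = req.robot_id
        else:
            waiting.append(req.robot_id)
    return granted, sorted(waiting)


requests = [
    Request("amr_1", "intersection_A", priority=1),
    Request("amr_2", "intersection_A", priority=3),
    Request("amr_3", "narrow_road_B", priority=2),
]
granted, waiting = resolve_conflicts(requests)
print(granted)
print(waiting)
```

In this toy case `amr_2` wins the intersection and `amr_1` waits. A robot already inside a segment keeps it regardless of priority, which is one simple way to avoid pre-empting a robot mid-crossing. Rerouting is left out of the sketch.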

Beebot

Education Robot Platform (ERP42)

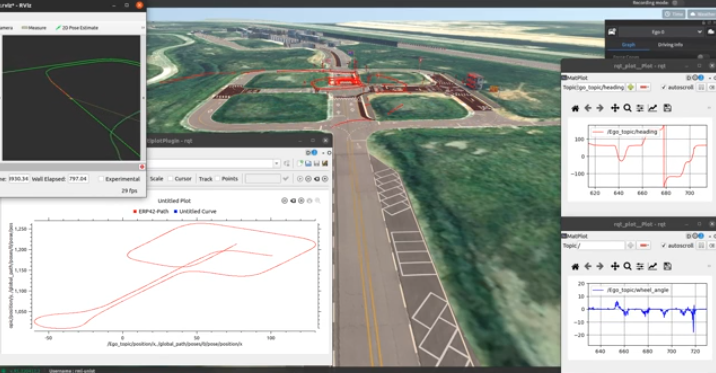

I worked with the Educational Robot Platform (ERP) developed by WeGo Robotics, which was integrated into the MORAI simulation environment for autonomous driving research and development. Using this platform, I implemented and tested core motion planning components. Specifically, I:

- Implemented local planning and motion tracking algorithms in the MORAI simulator.

- Integrated locally developed algorithms into the server-based simulation environment via the bridge node.

- Developed a hybrid control framework for concurrent simulation and real robot operation, using simulation outputs to drive the physical robot.

To support development and evaluation, I published key planning and tracking data to ROS topics, allowing for real-time visualization, logging, and further analysis. This setup provided a practical and extensible environment for experimenting with and validating autonomous driving algorithms on a compact, education-oriented platform.

On The Way Env. (OTW)

Human-Robot Teleoperation (HRT)

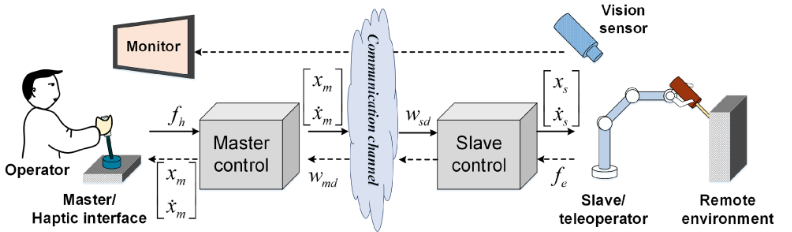

Human-Robot Teleoperation (HRT) is the focus of my Ph.D. thesis project, developed using MATLAB and supported by a series of published research works. It aims to establish a reliable and responsive teleoperation framework that balances both human control and robotic autonomy. The project addresses four key aspects:

- Robustness: Stable performance despite uncertainties from the operator, environment, or sensors.

- Performance: Effective task execution with speed and accuracy.

- Perception: Enhancing the operator’s awareness of the remote environment.

- Transparency: Accurately reflecting the environment and robot state to the operator.

Further details are in my thesis defense presentation, available in the Education section.

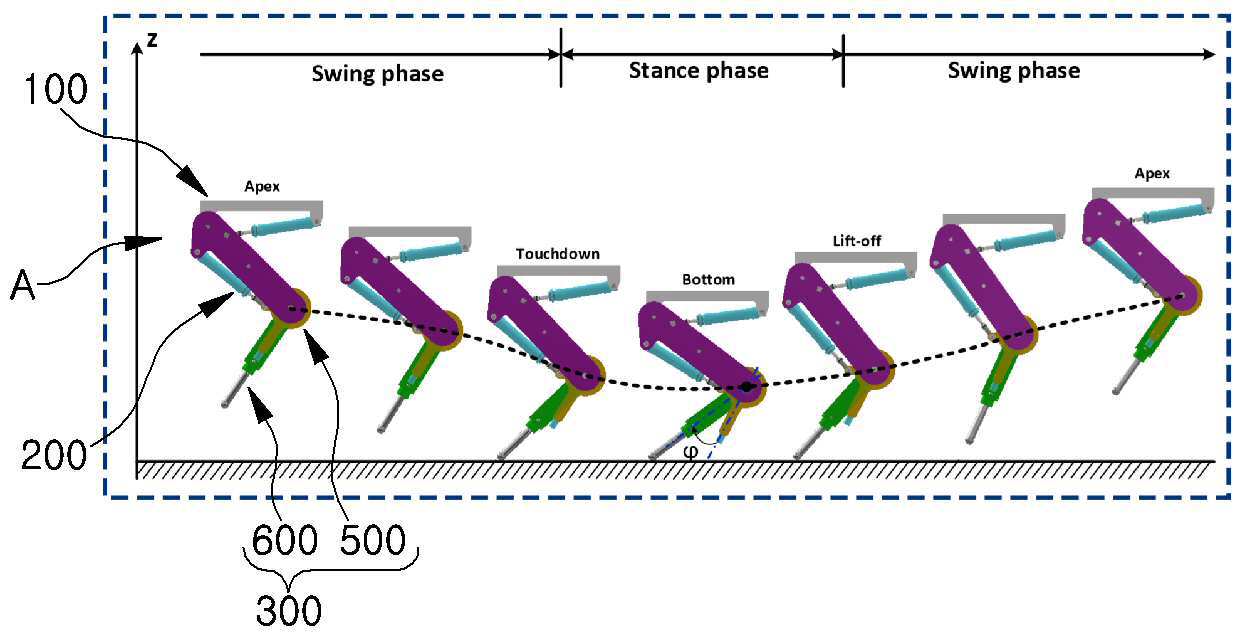

Variable Stiffness Actuator (VSA)

This project designs and controls a Variable Stiffness Actuator (VSA) for quadruped robot legs to enhance adaptability, shock absorption, and energy efficiency. The VSA consists of two actuators: one controls joint position, the other adjusts stiffness by modulating an elastic element. This allows real-time tuning of joint compliance independently from position. The control system includes:

- A high-level controller that plans motion and desired forces.

- A low-level controller that adjusts both position and stiffness using sensor feedback.

By integrating a VSA into each leg, the robot can achieve softer landings, better stability on uneven terrain, and improved safety when interacting with humans. This approach mimics animal-like locomotion and is well suited to dynamic and unpredictable environments.
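The idea of tuning stiffness independently of position can be shown with a highly idealized sketch. It is not the project's controller: each actuator is assumed to behave as a first-order system under a proportional law, and the gains, initial values, and function name `simulate_vsa` are hypothetical.

```python
def simulate_vsa(q_ref, k_ref, steps=200, dt=0.01, kp_pos=20.0, kp_stiff=10.0):
    """Track a joint-angle and a stiffness set-point with two separate loops.

    Assumed idealization: the position actuator changes the joint angle q
    and the stiffness actuator changes the joint stiffness k, with no
    coupling between the two loops.
    """
    q = 0.0    # initial joint angle [rad] (hypothetical)
    k = 50.0   # initial joint stiffness [N*m/rad] (hypothetical)
    for _ in range(steps):
        q += kp_pos * (q_ref - q) * dt      # position loop (actuator 1)
        k += kp_stiff * (k_ref - k) * dt    # stiffness loop (actuator 2)
    return q, k


# Same target angle, two different compliance settings.
q_soft, k_soft = simulate_vsa(q_ref=0.5, k_ref=20.0)     # compliant, e.g. for landing
q_stiff, k_stiff = simulate_vsa(q_ref=0.5, k_ref=200.0)  # stiff, e.g. for push-off
print(q_soft, k_soft)
print(q_stiff, k_stiff)
```

Both runs settle at the same joint angle while the stiffness differs, which is the property the two-actuator design is meant to provide. A real leg would add the elastic element's dynamics and contact forces, which this sketch ignores.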

Reception Robot

Technical Skills

[Programming & Algorithms] C/C++, Python, MATLAB, Reinforcement Learning & Optimal Control.

[Simulation & Development Tools] RViz (ROS), MORAI, Gazebo, CARLA, MATLAB/Simulink, Qt, Gym, Pygame, Git, Docker, Linux.

[Middleware & Cloud] MQTT, REST API, ROS/ROS2, Modbus, AWS.